As he watches a video of a fisherman being tugged and pulled into the water, Fahad, 16, is confident his eyes do not deceive him.

“That looks real to me, I think,” he says.

“Nothing’s off about it, and I think the floor was pretty natural as well.”

His confidence is misplaced. The video he is watching was generated using AI.

“That’s AI? Oh … oh, I’m terrible at this.”

Fahad was surprised to learn a video he thought was real was in fact AI-generated. (BTN High)

He might say he is terrible, but he is not alone. As Sasha, 17, watches the same AI fisherman, she falls into the same trap.

“I feel like that looked pretty realistic. I’m going to say real,” she says, incorrectly.

“Oh my gosh, this is not going well.”

Sasha says it is really hard to tell what is real and what is not. (BTN High)

Fahad and Sasha are part of an experiment.

We showed four high school students 21 short videos, 10 of them real and 11 AI-generated.

After each of the videos, we asked the students to decide if what they watched was real or AI.

Before the test even started, the students were not exactly confident in their ability to spot a fake.

“I think it probably comes up in my feed all the time, and I don’t even notice, so I don’t know. I guess we’ll see how it goes,” Sasha says.

Iris, 17, is equally unsure of her AI radar.

Iris did not feel confident when it came to spotting AI videos. (BTN High)

“I feel like I haven’t really been that good in noticing AI in the past, so I don’t think that has really changed.”

The results are in, and the students barely do better than chance.

In total, they were correct 67 per cent of the time.

When it came to the AI-generated videos, the students were fooled into thinking they were real a third of the time.

They were sceptical of real videos too, incorrectly guessing that 33 per cent of them were AI-generated.
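For readers who want to check how those figures fit together, here is a minimal sketch of the arithmetic. It assumes every student judged every video, which gives 4 x 21 = 84 calls in total; the article reports only the rounded percentages, so the function name and the pooled counts below are illustrative rather than the actual tally.

```python
# Figures reported in the article.
STUDENTS = 4
REAL_VIDEOS = 10
AI_VIDEOS = 11
AI_FOOLED_RATE = 1 / 3     # share of AI videos judged real ("a third of the time")
REAL_DOUBTED_RATE = 0.33   # share of real videos judged AI


def overall_accuracy(n_real, n_ai, real_doubted_rate, ai_fooled_rate):
    """Weighted share of correct calls across both kinds of video."""
    correct_real = n_real * (1 - real_doubted_rate)
    correct_ai = n_ai * (1 - ai_fooled_rate)
    return (correct_real + correct_ai) / (n_real + n_ai)


# Assumption: each student judged all 21 videos, so calls are pooled.
real_calls = STUDENTS * REAL_VIDEOS
ai_calls = STUDENTS * AI_VIDEOS
accuracy = overall_accuracy(real_calls, ai_calls, REAL_DOUBTED_RATE, AI_FOOLED_RATE)

print(f"{real_calls + ai_calls} calls, about {accuracy:.0%} correct")
```

Under that assumption, the two error rates combine to roughly the 67 per cent overall figure reported above.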

“It’s hard to distinguish between when there are real videos that look so unreal and when there are fake videos that … are designed to look so real,” Sasha says.

“It’s really hard to try and work out what’s real and what’s fake.”

She was able to correctly distinguish the videos 70 per cent of the time.

“I still feel like I’m not very confident in doing it,” says Sasha after taking the test.

A shift in AI content

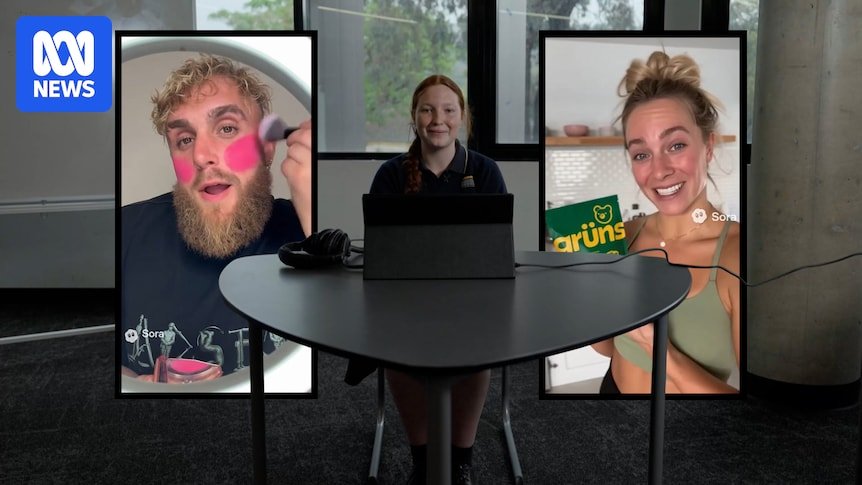

Last month, OpenAI, the company behind ChatGPT, released a new version of its AI video generator, Sora.

At the same time, it launched a new social media platform where everything is AI, and where people can upload their faces and allow others to deepfake them.

Jeremy Carrasco, who runs a social media account helping people spot AI videos, says since its launch, other social media platforms, such as Instagram, have been flooded with AI-generated videos.

“If you feel like you haven’t seen an AI video today, you probably don’t have good AI vibes. You probably don’t have a good vibe checker,” he says.

Jeremy Carrasco says social media is saturated with AI-generated videos. (BTN High)

Carrasco, also known as ShowTools.AI, says the latest iteration of Sora is specifically designed to create viral videos.

“Previously, a lot of AI slop really felt very sloppy because it wasn’t very interesting, but Sora 2 can make interesting things kind of by default.

“It has a certain virality kind of baked into it, where it’s obviously trained to, or it emerged as an ability for it to be kind of funny and quite interesting-looking without needing to put a lot of work into it.”

Since its release, Sora has copped a lot of criticism. At first, there seemed to be barely any restrictions on copyrighted material, something the company tried to fix with an update.

Sora has also been criticised for allowing users to use the likenesses of dead people.

AI-generated videos of Michael Jackson have gone viral, and in an online statement Zelda Williams, the daughter of the late Robin Williams, asked people to stop sending her videos of her dad.

Carrasco says AI companies are passing the buck, and that the proliferation of deepfakes on social media is deeply concerning.

“They [AI companies] are not at all concerned and pass off responsibility on the social harms, and they think that that’s just necessary,” he says.

“They feel like this is inevitable, therefore they’re actually doing us a favour by letting us all talk about it and deal with it, as if it’s not accelerationist and as if friction doesn’t matter.

“But in reality, having friction to creating deepfakes, having friction to creating things that are misleading to people, that friction is important.

“They’ve just lowered the ground floor so now anyone can do it.

“So while they might think that they’re doing it safely, people, I think, intuitively have a sense that normalisation of deepfakes is extremely dangerous.”

Sam Altman, the CEO of OpenAI, which released Sora, says the company is continuing to tweak safety features.

In our task, we specifically asked the students to look out for AI videos. Carrasco says when people are scrolling in their own time, you would expect them to be far less vigilant.

“The ‘for you’ feed has always been about kind of turning off, so it’s just not the place that people want to turn on and start detecting,” he says.

“People are frustrated and they’re sick of AI slop in their feeds. And again, I want to recognise that there will be a lot of people who are happy to watch AI content, but I don’t think we know how many people yet.”

Gio, 16, who took part in our test and was right an admirable 86 per cent of the time, says his feeds have been overrun with AI-generated content.

Gio says AI content is dominating his social media apps. (BTN High)

“I think AI in general has taken over every single app I use, like social media apps, like Instagram,” he says.

“I can tell which videos are AI or not, but sometimes … it’s scary to know that … I might not have caught that this video is AI or not.”

What to look out for

Carrasco says safeguarding yourself against AI videos is twofold: detection and prevention.

Detection is about looking for specific things in a video that give away that it is AI-generated.

“A lot of the things that are going viral on social media right now are security cameras, or body cameras, things that don’t actually rely on good video quality,” Carrasco says.

“But the other thing is that, you still need to look at the background, see if things look weird … and just say, like there are inconsistencies here.

“People can intuitively feel like something’s off and then check further, but it’s really about training that skill.”

Videos produced by Sora often display a Sora watermark; however, there are various ways to remove it.

The other thing to look out for is “AI noise” or “AI static”, where background or secondary features of videos will often appear wavy or glitchy.

Carrasco says you should always question the context of the video and what actually happens in it: would a cat really jump like this or would people really be filming the Louvre being robbed on their phone?

If you notice your feed is filled with AI-generated content, Carrasco says you can take steps to prevent it from popping up.

“If you want to keep your entertainment real, which I think is a rational thing to do, then you can start unsubscribing to pages that are doing AI videos,” he says.

“I know that a lot of people just scroll their ‘for you’ page and because of the way that algorithm works, they end up seeing a lot of their favourite creators over and over.

“But if you haven’t specifically followed them, now’s the time to do it because you want to probably start transitioning over more into a follower model so that you know that you can have at least a feed of creators that you trust that are real people.”
